It’s easy. Go subscribe to 𝑀𝑎𝑔𝑒𝑛𝑡𝑜2 𝐸𝑛𝑡𝑒𝑟𝑝𝑟𝑖𝑠𝑒 or 𝑆ℎ𝑜𝑝𝑖𝑓𝑦 and upload the catalog there.

Just kidding 🙃

Although it is a valid approach, things are not that simple. Every cloud platform charges a fee for using it. These fees often seem small when you are starting out but grow and begin to pinch as sales increase. As a benchmark, 𝑀𝑎𝑔𝑒𝑛𝑡𝑜2 today charges about 40 to 190 thousand dollars 💵 per year for its cloud solution, plus a share of the revenue depending on the sales on the platform. Ouch.
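To see how that pinch grows, here is a rough back-of-the-envelope sketch. Only the 40 to 190 thousand dollar range comes from the benchmark above. The revenue-share rate and the sales figures are made-up placeholders, so treat the output as an illustration and not as actual pricing.

```python
def annual_platform_cost(annual_sales, license_fee, revenue_share):
    """Yearly cost of a hosted platform: flat license fee plus a cut of sales."""
    return license_fee + annual_sales * revenue_share


# Assumption: a 1% revenue share, chosen only for illustration.
ASSUMED_REVENUE_SHARE = 0.01

# Hypothetical yearly sales volumes for a small and a larger store.
for annual_sales in (1_000_000, 12_000_000):
    low = annual_platform_cost(annual_sales, 40_000, ASSUMED_REVENUE_SHARE)
    high = annual_platform_cost(annual_sales, 190_000, ASSUMED_REVENUE_SHARE)
    print(f"sales ${annual_sales:,}: platform cost ${low:,.0f} to ${high:,.0f} per year")
```

The flat fee dominates at low volume, while the revenue share keeps growing with every extra dollar of sales.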

Now, coming back to the question, in this particular scenario it is far more convenient to build an in-house system. However, building an in-house e-commerce system of that magnitude is not cheap. This is why companies like Magento and Shopify thrive.

So, what’s the solution?

One solution is to take the community edition of 𝑀𝑎𝑔𝑒𝑛𝑡𝑜2 and scale it in-house. This is far cheaper and faster than building everything from scratch. After all, 𝑀𝑎𝑔𝑒𝑛𝑡𝑜2 is awesome in terms of the capabilities it provides. The trick is to launch a community Magento 2 system and scale its individual components as the need arises.

This is what we did for one of our clients who had a similar problem statement and wanted to build a similar system:

Step 1- Launch a Magento 2 community edition on a single server on AWS and make it live. Easy. This took us about a week to achieve.

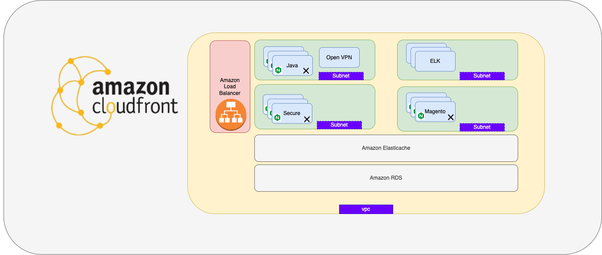

We used 𝐴𝑚𝑎𝑧𝑜𝑛 𝐶𝑙𝑜𝑢𝑑𝑓𝑟𝑜𝑛𝑡 as our global CDN for caching static assets like images, videos, JavaScript, and CSS files. We also set up a small 𝐽𝑎𝑣𝑎 microservice for OTP-based authentication since Magento does not support it by default.
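For a feel of what such an OTP service does at its core, here is a minimal sketch. Our service was written in Java; this version uses Python purely for brevity, and the class name, the 5-minute validity window, and the in-memory dictionary are all assumptions made for illustration, not our production code.

```python
import hashlib
import hmac
import secrets
import time

OTP_TTL_SECONDS = 300  # assumed validity window of 5 minutes


class OtpStore:
    """In-memory OTP store; a real service would keep this in a shared store."""

    def __init__(self, now=time.time):
        self._now = now
        self._pending = {}  # phone -> (sha256 hex digest of the code, expiry time)

    def issue(self, phone):
        # secrets gives a cryptographically strong random 6-digit code
        code = f"{secrets.randbelow(10**6):06d}"
        digest = hashlib.sha256(code.encode()).hexdigest()
        self._pending[phone] = (digest, self._now() + OTP_TTL_SECONDS)
        return code  # in practice this is sent by SMS, not returned to the client

    def verify(self, phone, code):
        # pop() makes every issued code single-use, whether or not it matches
        entry = self._pending.pop(phone, None)
        if entry is None:
            return False
        digest, expires_at = entry
        if self._now() > expires_at:
            return False
        candidate = hashlib.sha256(code.encode()).hexdigest()
        return hmac.compare_digest(digest, candidate)
```

Storing only a digest and comparing with `hmac.compare_digest` avoids keeping the plain code around and avoids timing leaks during comparison.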

Within a week of the launch, we started seeing high CPU spikes on the database, which is MySQL in the case of Magento.

Step 2- We decided to move MySQL out of the Magento server and host it separately. We decided to use 𝐴𝑚𝑎𝑧𝑜𝑛 𝑅𝐷𝑆 instead of a self-managed one. Why? The entire activity took less than 30 minutes. Time is money, guys. It took 5 minutes for Amazon to spawn a new RDS instance and then 25 minutes of downtime for us to migrate the existing data to it. And since we were using Amazon RDS, we did not have to worry about backups, downtime, or scaling out. And guess what? We did this a day before Black Friday. Our first Black Friday went great. No jitters or fluctuations in the database.

Most people stop here because, for a system to outgrow what Amazon RDS can handle, it has to operate at the scale of Amazon or Flipkart. However, it is technically possible to scale beyond this threshold as well, by using sharded databases or a library like Apache ShardingSphere to shard an existing relational database. This requires comparatively more development time, but more on that some other time.
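We never had to shard, but the core idea is small enough to sketch. The snippet below shows plain hash-based routing, assuming a hypothetical set of four order databases keyed by customer ID. It is not how ShardingSphere is configured, only an illustration of what any sharding layer has to decide for each query.

```python
import hashlib

# Hypothetical shard names; in practice these would be separate database hosts.
SHARDS = ["orders_db_0", "orders_db_1", "orders_db_2", "orders_db_3"]


def shard_for(customer_id, shards=SHARDS):
    """Pick a shard from a stable hash of the sharding key."""
    # sha256 is stable across processes, unlike Python's built-in hash() for str
    digest = hashlib.sha256(str(customer_id).encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


# The same customer always maps to the same shard.
print(shard_for(42) == shard_for(42))
```

The catch with simple modulo routing is that changing the number of shards remaps most keys, which is a big part of why sharding costs more development time.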

Everything was good, but Christmas was nearing and our single server was constantly under stress from the high number of concurrent users and sessions. We wanted to scale out our single instance, but Magento 2 stores sessions on disk by default. This posed a problem: if we scaled out without sticky sessions, each session would live on only one server, and people would start facing session issues whenever their request went to a server other than the one that established the session.

Step 3- We decided to use 𝐴𝑚𝑎𝑧𝑜𝑛 𝐸𝑙𝑎𝑠𝑡𝑖𝑐𝑎𝑐ℎ𝑒 instead of a Redis instance on the Magento server and migrated our session management and caching there. We also spawned a separate 𝐸𝑙𝑎𝑠𝑡𝑖𝑐𝑠𝑒𝑎𝑟𝑐ℎ cluster for our indexing needs. This allowed us to move our Magento servers into an auto-scaling group on AWS that scales on demand using our fully baked AMIs.
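The session problem and its fix can be shown with a toy simulation. Everything here is hypothetical (server names, the round-robin balancer, plain dictionaries standing in for disk storage and for Redis); it only illustrates why a shared session store is what makes scaling out safe.

```python
import itertools


class AppServer:
    """A web server that keeps sessions in whatever store it is given."""

    def __init__(self, name, session_store):
        self.name = name
        self.sessions = session_store  # dict-like: session_id -> session data

    def login(self, session_id, user):
        self.sessions[session_id] = {"user": user}

    def whoami(self, session_id):
        session = self.sessions.get(session_id)
        return session["user"] if session else None


def simulate(servers):
    """Log in on one server, then send the next request to the next server."""
    load_balancer = itertools.cycle(servers)  # round robin, no sticky sessions
    next(load_balancer).login("sess-1", "alice")
    return next(load_balancer).whoami("sess-1")


# Each server has its own local store, like sessions on local disk.
local = [AppServer("web-1", {}), AppServer("web-2", {})]

# Both servers share one store, standing in for a central Redis.
shared_store = {}
shared = [AppServer("web-1", shared_store), AppServer("web-2", shared_store)]

print(simulate(local))   # second server has never seen the session
print(simulate(shared))  # second server finds the session in the shared store
```

With local stores the second request comes back as logged out; with the shared store any server in the auto-scaling group can serve any request.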

This also meant that we had to write our own custom deployment jobs, which we did using 𝐽𝑒𝑛𝑘𝑖𝑛𝑠 and Ansible. We also had to set up our own analytics pipeline to aggregate sales and other metrics, which we did by parsing 𝑁𝑔𝑖𝑛𝑥 logs through an 𝐸𝐿𝐾 pipeline.
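Our pipeline ran on ELK, but the underlying idea fits in a few lines. The sketch below assumes Nginx's standard `combined` access log format and counts successful hits on the order success page per day as a rough proxy for orders. The success path is an assumption (adjust it if your store customizes the URL), and the sample lines in the usage are invented.

```python
import re
from collections import Counter

# Matches the start of an Nginx "combined" format access log line.
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<bytes>\d+)'
)

# Assumption: the page shown after a successful order.
ORDER_SUCCESS_PATH = "/checkout/onepage/success/"


def count_orders_per_day(lines):
    """Count successful GETs of the order success page, grouped by day."""
    orders = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        path = match["path"].split("?", 1)[0]  # ignore the query string
        if (match["method"] == "GET" and match["status"] == "200"
                and path == ORDER_SUCCESS_PATH):
            day = match["time"].split(":", 1)[0]  # "24/Dec/2021:10:15:32 ..." -> "24/Dec/2021"
            orders[day] += 1
    return orders


sample = [  # invented log lines for illustration
    '203.0.113.7 - - [24/Dec/2021:10:15:32 +0000] "GET /checkout/onepage/success/ HTTP/1.1" 200 5123 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [24/Dec/2021:10:16:01 +0000] "GET /catalog/product/view/id/1 HTTP/1.1" 200 9001 "-" "Mozilla/5.0"',
]
print(count_orders_per_day(sample))
```

Access logs alone do not carry order amounts, so revenue figures need either extra fields in the log format or a join against order data.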

By this time we were doing about a million dollars in sales every month, and our default payment gateway had started misbehaving for certain users.

Step 4- We now decided to move our checkout process into a separate microservice, which allowed us to route each transaction to one of multiple payment gateways based on rules like success rates and payment fees. We wrote the service in 𝑁𝑜𝑑𝑒.𝑗𝑠 and moved our entire checkout process to a new subdomain called 𝑠𝑒𝑐𝑢𝑟𝑒.𝑑𝑜𝑚𝑎𝑖𝑛.𝑐𝑜𝑚 for our client.
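A rule of that kind might look roughly like the sketch below. The real service was written in Node.js; this is Python purely for brevity, and the gateway names, rates, thresholds, and the retry penalty are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Gateway:
    name: str
    success_rate: float  # recent share of successful payments, between 0 and 1
    fee_percent: float   # fee charged on the transaction amount
    max_amount: float    # largest transaction the gateway accepts


def expected_cost(gateway, amount, retry_penalty):
    """Gateway fee plus the expected cost of a failed attempt."""
    fee = amount * gateway.fee_percent / 100
    return fee + (1 - gateway.success_rate) * retry_penalty


def choose_gateway(gateways, amount, min_success_rate=0.9, retry_penalty=5.0):
    """Cheapest reliable gateway; fall back to the most reliable one."""
    usable = [g for g in gateways if g.max_amount >= amount]
    if not usable:
        raise ValueError("no gateway accepts this amount")
    reliable = [g for g in usable if g.success_rate >= min_success_rate]
    if not reliable:
        return max(usable, key=lambda g: g.success_rate)
    return min(reliable, key=lambda g: expected_cost(g, amount, retry_penalty))


# Hypothetical gateways and figures.
GATEWAYS = [
    Gateway("gateway_a", success_rate=0.98, fee_percent=2.0, max_amount=10_000),
    Gateway("gateway_b", success_rate=0.93, fee_percent=1.5, max_amount=2_000),
    Gateway("gateway_c", success_rate=0.80, fee_percent=1.0, max_amount=10_000),
]

print(choose_gateway(GATEWAYS, 100).name)
print(choose_gateway(GATEWAYS, 5_000).name)
```

Keeping the rule in one function makes it easy to feed in fresh success rates as gateways degrade or recover.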

Step 5- An ever-growing user base now needed mobile applications, which we built using 𝑅𝑒𝑎𝑐𝑡𝑁𝑎𝑡𝑖𝑣𝑒 and the 𝑀𝑎𝑔𝑒𝑛𝑡𝑜2 APIs and launched in under a month.

And now the system was finally ready for a million dollars in sales per day. Customizations are now comparatively easier and faster to implement, and we are on a 100% open-source tech stack. This was how the system finally looked, and it took us a little under 100 thousand dollars 💵 to get here.